Word Counter Using Producer-Consumer Pattern

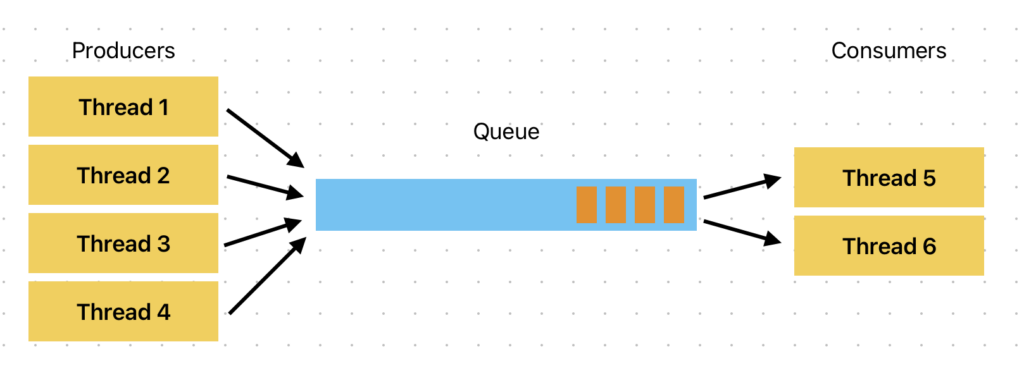

Parallel computing is significant for performance and efficient use of resources. One pattern to use is the Producer-Consumer Pattern. Simply, it means some threads are information creators called the producers, and some are processors, called consumers. This will allow faster processing to reach the desired goal.

In this post, I will be explaining the Producer-Consumer Pattern and then implement a simple program that counts the number of words in a file using Go.

What is a Producer-Consumer Pattern?

This pattern is responsible for efficiently processing data. In a way, the goal is to create a pipeline where different threads take on different roles. A real-life example can be from restaurants. In the back, people cook the various ingredients, and then the front of the kitchen will bring all the ingredients into one presentable plate. Here the cooks are the producers where the process is to take a specific ingredient and cook it, and the consumers are the presenters where ingredients are assembled.

This is quite useful if continuous data is streamed or large enough making computation time longer when run sequentially. Counting words in a file is a great example. If the file is large enough we will have two different processes spawned to decrease the runtime. The file can be broken down into chunks of bytes and passed to a producer thread which counts the number of words. Then this is given to a consumer which tallies up all the number of words found in chunks.

How is Producer-Consumer different than MapReduce?

MapReduce was developed at Google to handle large amounts of processing and storage. They have implemented and also tested various conditions in their systems. Here is a link to their paper.

It is a superset of this pattern. The difference lies in how the processing occurs. MapReduce has different roles that help improve processing time and store them. It assures reliability in the system. If any node is done it can recover, and redistribute the load. This allows adapting to network load conditions, worker availability, and more possible hurdles.

MapReduce utilizes concurrency on a single device and across multiple devices on a network. There is a master node that manages the orchestration of devices. These will

Word Counter Application in Go

My goal with this small project is to demonstrate how to implement a Producer-Consumer Pattern for word counting in Go. The producers will be counting the number of words in a particular chunk and there will be a single consumer which counts the outputted sum into a single value.

What are Go Routines?

In Go, there is a concept called Go Routines which I’ll be utilizing. This is the same as running a thread. The advantage of go routines compared to threading processes in other languages is that they are “virtual threads”. They run in the Go runtime meaning they are not OS threads. This allows Go to be able to detect deadlocks. Another advantage is that they are easy to use and they are lightweight.

Producer-Consumer Pattern Code

First, we define a main function responsible for receiving the file that the words will be counted from, and print the result count. Very simply here is the code:

func main() {

var filename string

flag.StringVar(&filename, "filename", "", "Filename to process")

flag.Parse()

if filename == "" {

fmt.Println("invalid file name")

os.Exit(1)

}

wc, err := countWords(filename)

if err != nil {

fmt.Println(err)

os.Exit(1)

}

fmt.Printf("Words counted: %d\n", wc)

}

File Reading

The part we will be looking at here is the countWords function. Our goal is to check if the file exists by trying to open it and read it in chunks of 512 bytes. This is configurable and a sweet spot can be found by trial and error.

func countWords(filename string) (int, error) {

var count int = 0

fileIn, err := os.Open(filename)

if err != nil {

return 0, err

}

defer fileIn.Close()

reader := bufio.NewReader(fileIn)

chunkSize := 512

Producer Spawning

processChan := make(chan int)

var wg sync.WaitGroup

for {

buf := make([]byte, chunkSize)

numBytes, err := reader.Read(buf)

if err != nil {

fmt.Printf("error reading file: %s\n", err)

return 0, err

}

wg.Add(1)

go mapper(buf, numBytes, processChan, &wg)

if _, err := reader.Peek(1); err == io.EOF {

break

}

}

The next step is to create a channel and go routines to count the words in a given chunk. This will be our producers or in MapReduce, this will be the mappers. To spawn these go routines we run a loop until the file is completely read and producers are spawned.

The wait groups are defined to synchronize all the go routines. Once synchronized the channel has to be closed so that the go routines are not stagnant. This will create a deadlock error. To prevent this, we create a separate go routine in the background to wait for all the producers to end.

go func() {

wg.Wait()

close(processChan)

}()

Gathering All Pieces of Code

Finally, run a consumer, or reducer, to count all the sums from each chunk of data processed then return the total. There is no need for mutexes to manage resource sharing since there is a single consumer. Below are the definitions of the producer and consumers.

func mapper(data []byte, n int, c chan int, wg *sync.WaitGroup) {

defer wg.Done()

chunk := string(data[:n])

c <- len(strings.Fields(chunk))

}

func reducer(count *int, c chan int) {

for num := range c {

*count += num

}

}

The full process is as follows:

- Open File

- Read in chunks of the file

- Spawn a producer Go Routine with the data chunk

- Repeat steps 2 and 3 if the file is not fully read

- Create a Go Routine to wait for all producers to be done and close the channel

- Sum up all word counts in the channel

- Print the sum

The full code can be found on my Github. Check out my Credit Card Validator API post also written in Go.

Thanks for reading this post!

If you’d like to contact me about anything, send feedback, or want to chat feel free to:

Send an email: andy@serra.us

Archives

Calendar

| M | T | W | T | F | S | S |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | ||

| 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| 13 | 14 | 15 | 16 | 17 | 18 | 19 |

| 20 | 21 | 22 | 23 | 24 | 25 | 26 |

| 27 | 28 | 29 | 30 | 31 | ||

Leave a Reply